GPT-4.1 Release: OpenAI's Focus on Coding Excellence

The chatgpt 4.1 release marks a significant advancement. OpenAI has enhanced GPT-4.1 to address coding problems more effectively. It boasts a 21.4% increase in accuracy compared to GPT-4, achieving a score of 55% on the SWE-bench coding test. The models now come equipped with improved debugging tools and can manage up to 1 million tokens simultaneously. Additionally, OpenAI has reduced the cost per query by 80%. Overall, the chatgpt 4.1 release is faster, more affordable, and more beneficial for coding tasks.

Key Takeaways

GPT-4.1 is 21.4% better at solving coding problems.

It can read up to 1 million tokens, handling big codes easily.

Better debugging tools help fix errors faster, saving time.

GPT-4.1 works with many coding languages, helping teams work together.

It costs 80% less than older versions, saving money for users.

Key Features of GPT-4.1

Enhanced Coding Capabilities

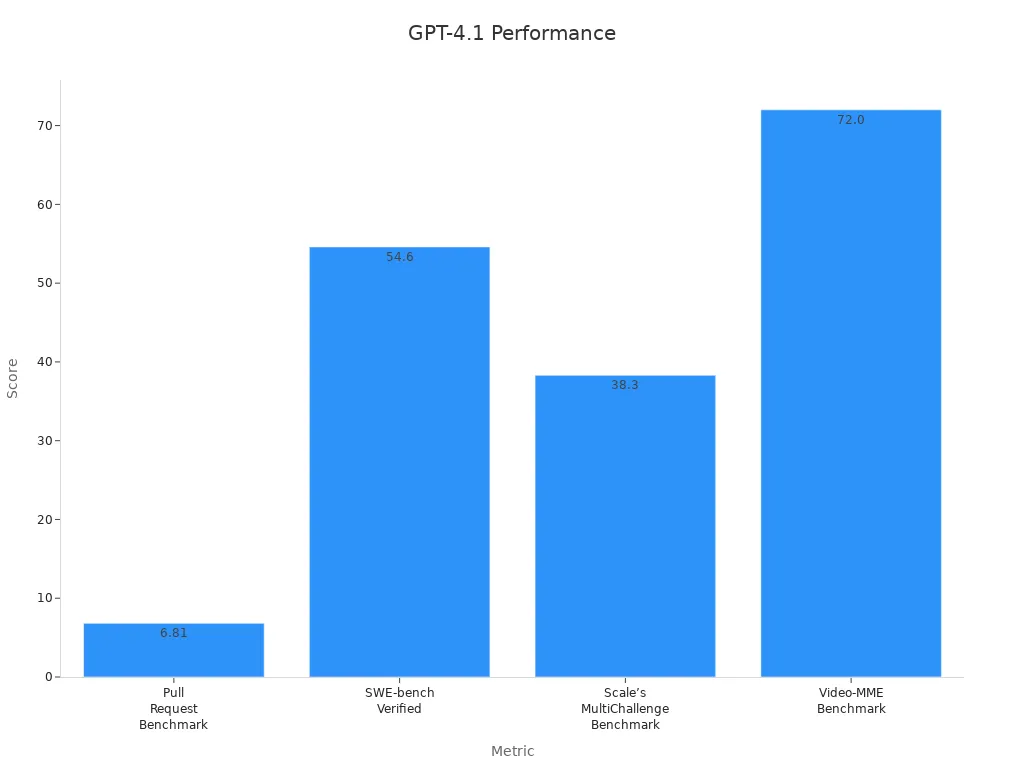

GPT-4.1 changes how coding models help with programming tasks. OpenAI improved its coding skills, making it better for exploring code and fixing problems. On SWE-bench Verified, GPT-4.1 scored between 52% and 54.6%, which is 21.4 points higher than GPT-4. This shows it can handle harder coding challenges with more accuracy.

GPT-4.1 is great at coding and following instructions. It helps with debugging, writing new code, and checking scripts quickly and correctly. OpenAI says GPT-4.1 beats older models on coding tests, proving it’s the best in the gpt-4.1 group.

Expanded Context Window

ChatGPT 4.1 now has a bigger context window, letting it process up to 1 million tokens at once. This upgrade is helpful for tasks like reading big codebases or long prompts.

Studies show detection accuracy changes based on where code is located:

0-25% of code: Best accuracy for finding all types of errors.

25-50% of code: Good accuracy, still finds all error types.

50-75% of code: Accuracy drops but errors are still found.

75-100% of code: Lowest accuracy, struggles with misplaced returns and small mistakes.

This proves the larger context window helps the model keep track of important details in long code. With this feature, you can work on big projects without losing key information.

Improved Debugging Tools

Debugging is easier with GPT-4.1’s better tools. It spots mistakes faster and gives clear solutions, saving time and effort. It finds errors in different parts of code, even tricky ones like misplaced returns or small counting mistakes.

OpenAI made GPT-4.1 more useful for debugging, helping developers and teams fix problems. Whether you’re working on a short script or a big project, the model follows instructions well and solves errors accurately.

Multilingual Coding Support

Coding often needs using many programming languages, and GPT-4.1 does this well. It works with lots of languages, helping developers everywhere. Whether writing Python, fixing Java, or using Rust or Julia, GPT-4.1 gives accurate help that fits the task.

A key feature of GPT-4.1 is its skill in handling many coding languages with great accuracy. It has an impressive 87.3% Global MMLU Pass Rate, proving it works well in different coding setups. The table below shows this success:

Metric | Value |

|---|---|

Global MMLU Pass Rate | 87.3% |

This high score shows how well GPT-4.1 handles different languages and tasks. It can understand syntax, fix mistakes, and even change code between languages.

For example, if you’re working on a project using Python and JavaScript, GPT-4.1 can switch between them easily. It helps keep your work consistent and error-free. It also supports programming guides in other languages, helping developers from different places work together better.

The multilingual skills of GPT-4.1 go beyond coding. It can read and reply to prompts in many human languages like English, Spanish, or Mandarin. This is helpful for global teams, making teamwork and communication easier.

With multilingual coding support, GPT-4.1 lets you handle complex projects across languages without needing extra tools. It’s a great tool for developers and businesses to save time and work more efficiently.

GPT-4.1 Model Versions and Uses

Standard, Mini, and Nano Models

OpenAI has three versions of GPT-4.1: standard, mini, and nano. Each version is made for different tasks, balancing speed, cost, and power.

Model | What It Does |

|---|---|

GPT‑4.1 | Best for hard tasks like coding and research. |

GPT‑4.1 mini | Medium-sized, cheaper, and faster, with 83% lower cost. |

GPT‑4.1 nano | Smallest, fastest, and great for text or sorting tasks. |

The standard model is best for tough reasoning, scoring over 90% on MMLU. GPT-4.1 mini is cheaper and works well for mixed tasks. GPT-4.1 nano is super quick, scoring 80.1% on MMLU. These choices let you pick the right model for your needs.

Custom Help for Developers

GPT-4.1 adjusts to your coding needs, giving custom help. It can debug, write, or improve code quickly and correctly.

If you work with many coding languages, GPT-4.1 can switch between Python, JavaScript, or Rust easily. This keeps your work smooth and reduces mistakes. OpenAI’s API also lets you add GPT-4.1 to your tools for custom coding solutions.

Real-life examples show how helpful GPT-4.1 is. Developers use it to stay focused and finish hard projects faster. By fitting your needs, it boosts productivity and makes coding easier.

Big Business Coding Uses

GPT-4.1 is great for big companies needing fast, accurate coding. It handles hard tasks well and saves time.

Metric | GPT-4.1 Score | Better Than Older Models By |

|---|---|---|

SWE-bench Verified | 54.6% | 21.4% higher |

Fewer unnecessary edits | 2% | Down from 9% |

Fewer extra file readings | 40% less | Compared to top models |

Fewer changes to unneeded files | 70% less | N/A |

Companies like Thomson Reuters and Carlyle have seen big improvements. Thomson Reuters improved document reviews by 17%. Carlyle sped up financial data work by 50%. These results show GPT-4.1 is a strong tool for big software projects.

Performance Benchmarks and Real-World Applications

Coding Speed and Accuracy Metrics

Speed and accuracy are very important in coding. GPT-4.1 improves both, making it great for software tasks. OpenAI adjusted the model to perform better, as shown in tests.

Benchmark | Score |

|---|---|

MMLU | |

GPQA | 50.3% |

Coding | 9.8% |

These scores show GPT-4.1 can handle tough tasks well. For example, its SWE-bench Verified score is 21.4 points higher than GPT-4. This shows it’s better at fixing, writing, and improving code quickly.

Companies have also seen big improvements. Thomson Reuters improved document review accuracy by 17%. Carlyle cut the time for financial data work by 50%. Windsurf reduced extra file reads by 40% and unnecessary edits by 70%. These results show how GPT-4.1 helps developers work faster and better.

Case Studies: Businesses Using GPT-4.1

Many companies use GPT-4.1 to solve real problems. Thomson Reuters added GPT-4.1 to its legal tools. This made document reviews 17% more accurate and faster for lawyers.

Carlyle, an investment company, used GPT-4.1 to handle financial data better. It cut data processing time in half, letting teams focus on important decisions instead of manual work.

Windsurf, a software company, used GPT-4.1 to improve coding tasks. The model found and removed extra file reads and edits, saving 40% of time on repetitive tasks. This made their team more efficient.

These examples show how GPT-4.1 helps businesses work smarter and stay ahead in a fast-changing world.

Benefits for Individual Developers and Teams

GPT-4.1 isn’t just for big companies. It’s also helpful for solo developers and small teams. Its ability to handle 1 million tokens at once makes it great for large projects. You can debug or write code without losing important details.

For solo developers, GPT-4.1 makes hard tasks easier. It helps write better code, find mistakes, and suggest fixes. This saves time and lets you focus on creating new ideas.

Teams benefit from its ability to support many coding languages. This makes it easier for team members using different languages to work together. The API also lets teams customize GPT-4.1 to fit their needs, boosting productivity.

Surveys show its impact. GPT-4.1 did better than others in 55% of pull request tests. Its SWE-bench Verified score of 54.6% is 21.4 points higher than GPT-4. It also improved by 10.5 points on Scale’s MultiChallenge Benchmark. These results prove GPT-4.1 is a powerful tool for all developers.

Competitive Positioning of GPT-4.1

Comparison with Other AI Models

GPT-4.1 performs better than many other AI models. OpenAI designed it to handle coding tasks with high accuracy and speed. It scored 54.6% on the SWE-Bench Verified test, beating older versions like GPT-4o and GPT-4.5. Its ability to solve tough problems makes it a top choice in AI.

Here’s how GPT-4.1 compares to other models:

Metric | GPT-4.1 | Claude 3.7 | Gemini 2.5 Pro | Grok 3 |

|---|---|---|---|---|

MMLU | 90.2% | N/A | N/A | N/A |

Global MMLU | 87.3% | N/A | N/A | N/A |

GPQA | 66.3% | N/A | N/A | N/A |

AIME2024 | 48.1% | N/A | N/A | N/A |

IFEval | 87.4% | N/A | N/A | N/A |

SWE-Bench Verified | 54.6% | N/A | N/A | N/A |

Performance in Pull Request Reviews | Outperformed in 55% of cases | N/A | N/A | N/A |

This table shows GPT-4.1 is better at coding and other tasks than its competitors.

Unique Selling Points of GPT-4.1

GPT-4.1 has special features that make it stand out. It can process up to one million tokens at once. This helps with big projects like reading large code files or fixing complex systems.

It also creates cleaner code changes, making it easier for developers to work. GPT-4.1 supports many programming languages, helping teams from different countries work together smoothly.

Here’s a table showing GPT-4.1’s strengths:

Metric | GPT-4.1 | GPT-4o | GPT-4.5 |

|---|---|---|---|

SWE-Bench Verified Score | 54.6% | 33.2% | 38% |

Aider’s Polyglot Benchmark | 53% | 18% | N/A |

Scale’s MultiChallenge Accuracy | 38.3% | 27.8% | N/A |

These features make GPT-4.1 a great tool for both small and big projects.

Cost Efficiency for Developers

GPT-4.1 is powerful and affordable. It costs 80% less than GPT-4o for API use. Even with lower costs, it still works faster and more accurately. Inference latency is nearly 50% lower, meaning quicker responses.

Here’s a table showing its cost benefits:

Feature | GPT-4o Pricing | GPT-4.1 Pricing | Savings per Query |

|---|---|---|---|

API Pricing | Higher | Reduced | Up to 80% |

Performance Metrics | Standard | Enhanced | Improved |

Response Times | Slower | Faster | Improved |

Choosing GPT-4.1 saves money and boosts productivity. It’s a smart choice for developers who want quality and efficiency.

Future Implications of GPT-4.1

OpenAI’s Vision for AI in Coding

OpenAI sees AI as a key tool for coding. They want to make AI easier and more useful for developers. With over 300 million weekly users and $529 million in ChatGPT revenue, OpenAI shows how powerful AI tools can be. Features like voice chat in ChatGPT prove their focus on user-friendly solutions.

OpenAI’s plans go beyond just helping programmers. They aim to let non-coders use AI to build apps. This means experts in other fields can create tools without needing to code much. You can focus on solving problems while AI handles boring, repetitive tasks.

Impact on the Software Development Industry

GPT-4.1 will change software development in big ways. Here are some trends to expect:

Fewer Traditional Programming Jobs: AI will take over many beginner and mid-level coding tasks.

AI-Only Software Companies: Startups may use only AI to build software faster than others.

New AI-Focused Jobs: Roles in AI safety, rules, and security will grow, creating skilled jobs.

GPT-4.1’s features, like smarter debugging and adaptive coding, will save time. It can cut development time by 40% and reduce bugs by 60%. This helps you create better software faster.

What’s Next for GPT Models?

The future of GPT models is exciting, with many improvements coming:

Better Efficiency: Future models will cost less and fit specific business needs.

Smarter Context: They will understand questions better and give more accurate answers.

Multimodal Abilities: Models will work with text, pictures, and other data types.

Personalized Help: They’ll learn your preferences and give tailored responses.

Generative AI could be worth $1.3 trillion by 2032. OpenAI’s focus on new ideas ensures future GPT models will stay essential for developers and businesses.

The ChatGPT 4.1 update sets a high standard for coding AI. Its improved models make solving tough programming problems much easier. OpenAI’s updates improve coding accuracy and lower costs greatly.

With nearly double the performance of GPT-4o and 39 new tests passed, GPT-4.1 shows it can handle hard tasks well. These upgrades make it useful for both developers and companies. By focusing on better coding and saving time, this version is changing how software is created.

FAQ

How is GPT-4.1 better than older models?

GPT-4.1 is faster, more accurate, and handles bigger tasks. It can process up to 1 million tokens, making it great for large projects. These upgrades make it more useful than earlier versions.

Can GPT-4.1 help find and fix coding mistakes?

Yes, GPT-4.1 is great at debugging. It quickly finds errors and gives clear fixes. Its tools make solving tricky coding problems easier and save time.

Does GPT-4.1 work with different programming languages?

GPT-4.1 supports many languages like Python, Java, and Rust. It also understands prompts in different human languages, helping global teams work better together.

How does GPT-4.1 help developers work faster?

GPT-4.1 automates boring tasks, reduces mistakes, and speeds up coding. It handles big projects well and gives helpful suggestions, so you can focus on creative ideas.

Is GPT-4.1 affordable for businesses?

Yes, GPT-4.1 costs 80% less than older models. It works faster and more accurately, making it a smart and budget-friendly choice for companies.

See Also

Creating an Adorable AI Tool for 2025 Success

Best No-Cost AI Website Creators for Developers in 2025

Discovering the Most Charming AI Options for 2025